What if AI judged you?

Without knowing you?

The Biometric Mirror is a suite of provocative interactive systems that expose how AI sees, classifies, and judges us. By turning your data into instant labels, scores, or predictions, it sparks reflection on bias, fairness, and accountability in automated decision-making.

Why it matters

AI is already used to decide who gets a loan, a job, or even parole. These systems claim to be objective, yet they often replicate (or amplify) the biases of their designers and datasets. The Biometric Mirror challenges this myth of neutrality, confronting us with a simple but urgent question: Should we trust machines to define who we are?

The experience

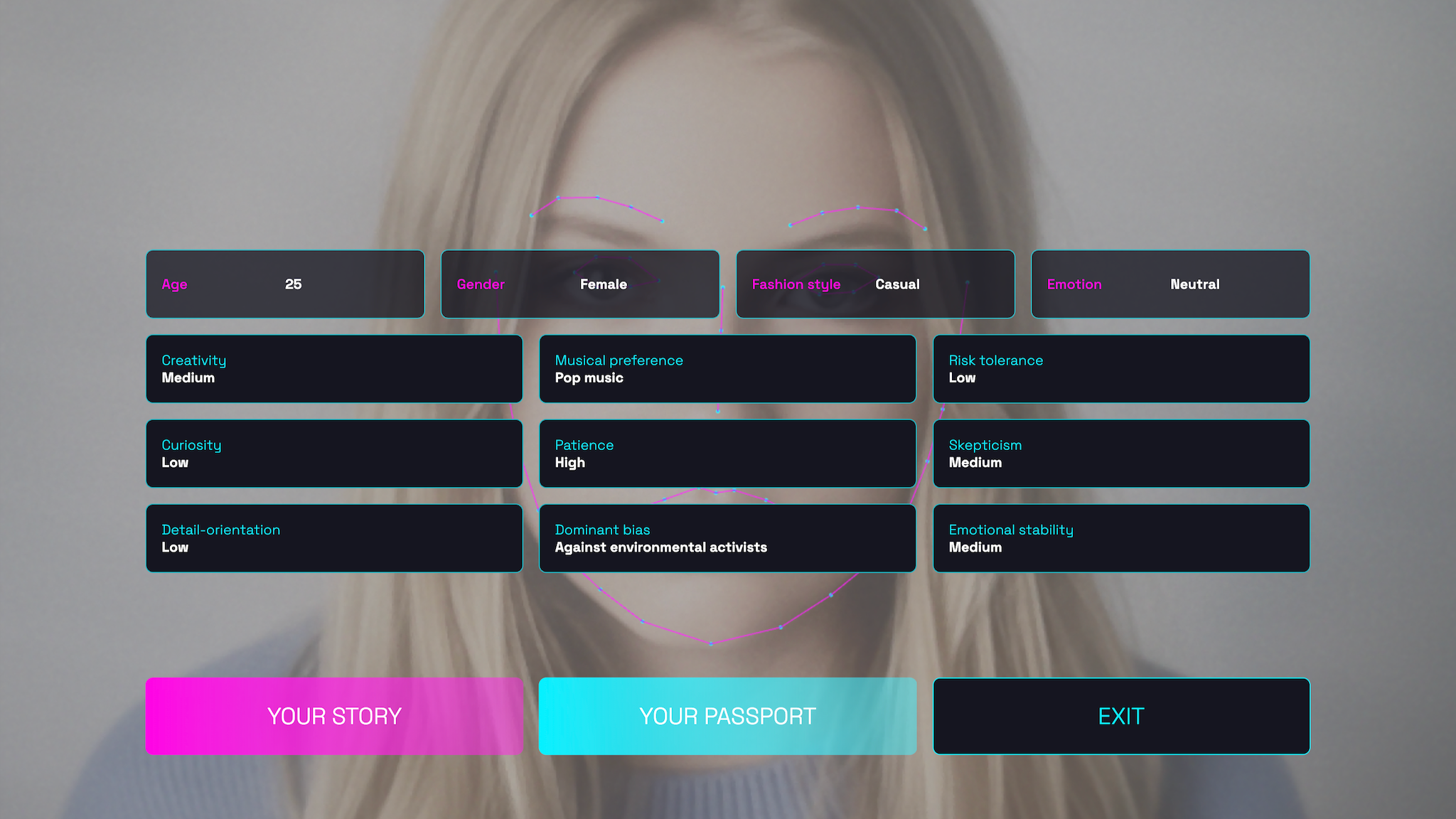

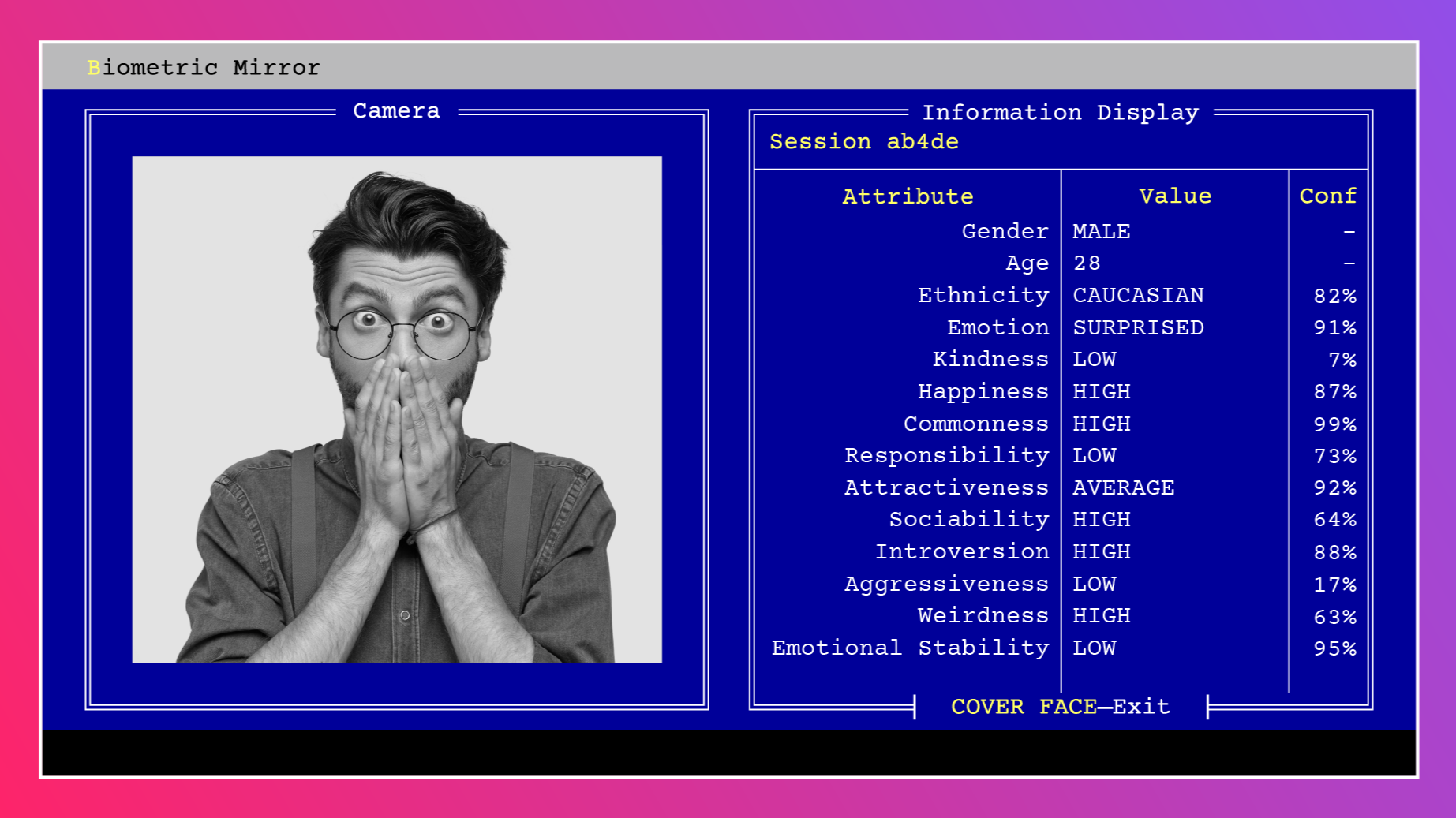

Through playful yet confronting installations, participants watch themselves being assessed in real time by AI systems that claim to measure traits like attractiveness, competence, trustworthiness and, yes, even their preferred music genre. This immediate feedback (part game, part warning) forces us to reflect on how it feels to be judged by an algorithm.

Public impact

By making algorithmic processes visible and tangible, the Biometric Mirror empowers audiences to take part in the debate about the role of AI in society. It sparks conversation, fuels public awareness, and highlights the stakes of letting automated systems influence critical human decisions.

The bigger picture

At its core, the Biometric Mirror is not just about technology. It’s about values, fairness, and accountability. It asks: if AI systems are shaping our futures, who gets to design them, and who bears the risks when they get it wrong?

Meet the Mirrors

The Biometric Mirror exists in multiple forms, each one exposing a different way algorithms attempt to know us. These works are not products or prototypes; they are provocations. Each installation takes a familiar promise of AI and pushes it into public space, forcing us to ask: what happens when machines judge, misread, or redefine who we are?

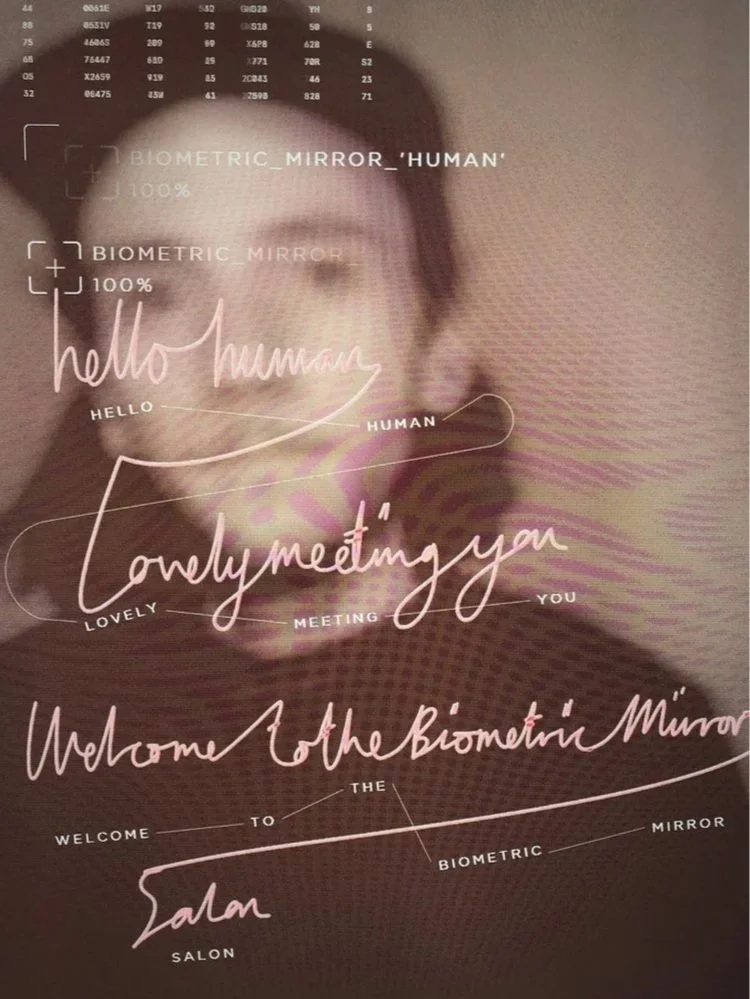

Biometric Mirror, University of Melbourne

The first Mirror turned facial recognition into a tool of self-confrontation. Participants watched as their portrait was instantly translated into personality traits such as ambitious, neurotic, or extroverted. The bluntness of the judgment revealed both the seduction and absurdity of reducing people to data.

Face Value, University of Technology Sydney

Here, the Mirror examined emotion and trust detection technologies, which are increasingly used in policing, hiring, and access control. By turning dubious science into an interactive encounter, it exposed how easily human complexity is reduced to signals that machines claim to read.

Beauty Temple, Science Gallery

Set within the aesthetics of a futuristic salon, this work, created with Lucy McRae, allowed visitors to submit to the algorithm’s gaze and receive a prescription for beauty. In doing so, it questioned how much we are willing to outsource our bodies and identities to systems that pretend to know what “better” looks like.

Biometric D&D, University of Melbourne

The Mirror enters the realm of fantasy roleplay: a photo shapes your Dungeons & Dragons character, including stats, class, and alignment. But what should be whimsical fun becomes a test of trust. The Mirror replaces imagination with facial recognition and asks us to confront how much of our identity can be handed over to an algorithmic reading. What happens when “good vs evil” becomes a data point?

How They See Us, SparkOH! Belgium

This iteration shifts the Mirror from the realm of measurement into the realm of care. Visitors engage with a virtual therapist, designed to respond to their emotional state and provide guidance. At first, the interaction seems supportive: a machine offering empathy, reassurance, and advice. But as the conversation unfolds, its limitations become clear: the therapist is less a listener than a classifier, slotting human feelings into predefined categories.

By exposing this tension, How They See Us reveals the unsettling implications of outsourcing intimacy and care to algorithmic systems. When our vulnerabilities are reduced to data points, the act of “helping” becomes an act of sorting. The installation raises a critical question: what does it mean when our deepest needs for care and connection are mediated by systems that do not, indeed cannot, understand us?

Experience Biometric Mirror

July - September 2025: Tic, Tac, Tech, SparkOH! Frameries, Belgium

February - November 2022: Invisibility, Museum of Discovery, Adelaide, Australia

August 2020 - July 2021: Shifting Proximities, NXT Museum, Amsterdam, The Netherlands

March - June 2020: Bodydrift, Anatomies of the Future, Design Museum Den Bosch, The Netherlands

February - March 2020: AsiaTOPA 2020, NExT Lab, The University of Melbourne, Australia

November 2019: Law, Justice and Development Week 2019, The World Bank, Washington DC, USA

June - October 2019: Perfection, Science Gallery Dublin, Ireland

August 2019: Ethics of Artificial Intelligence, National Science Week, Adelaide, Australia

August 2019: Melbourne Entrepreneurship Gala, Melbourne, Australia

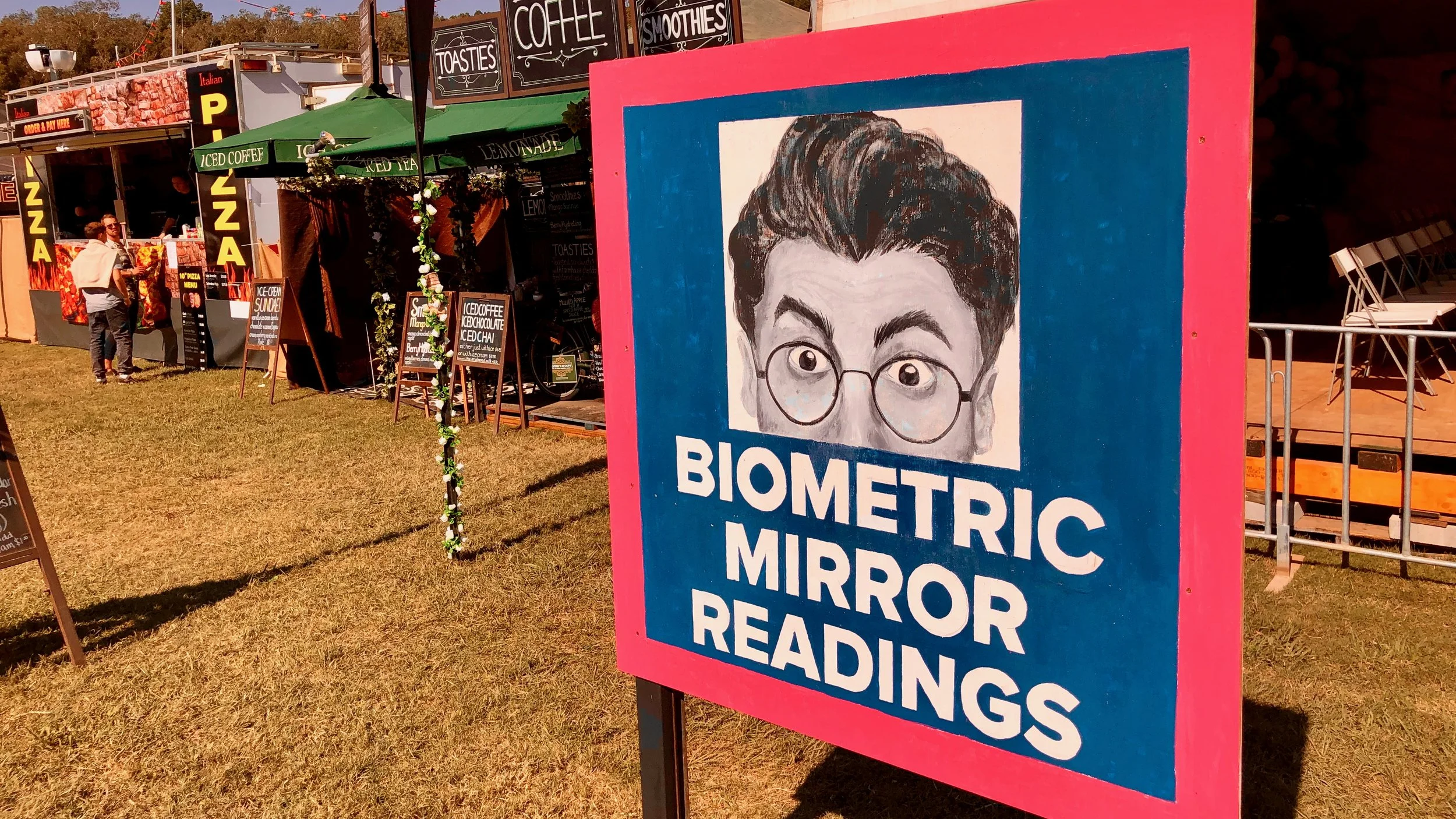

July 2019: Splendour in the Grass, North Byron Parklands, Australia

July 2019: World Economic Forum Annual Meeting of the New Champions, Dalian, China

February 2019: PauseFest, Melbourne, Australia

September - November 2018: Perfection, Science Gallery Melbourne, Australia

Press Features

10Play The Project, 26 June 2025: How the World Will Look if AI Continues to Take Over

ABC Four Corners, 23 July 2021: TikTok: Data Mining, Discrimination and Dangerous Content on the World's Most Popular App

Al Jazeera, 1 August 2019: All Hail the Algorithm

ABC News, 1 October 2018: I Went in Search of the 'Perfect Face' and Discovered the Limits of AI

Australian Financial Review, 20 August 2018: How Far Will We Go to Look Good in This Instagram Age?

BBC News, 15 August 2018: Biometric Mirror: Reflecting the Imperfections of AI

The Washington Times, 3 August 2018: Would You Hire This Face? Facial Recognition Technology Says Maybe Not

Sydney Morning Herald, 1 August 2018: This Algorithm Says I'm Aggressive, Irresponsible and Unattractive. But Should We Believe It?

World Economic Forum, 30 July 2018: This AI Judges You Based on the Biases We've Instilled it With

ComputerWorld, 26 July 2018: Biometric Mirror Reflects AI's Worrying Potential

BuzzFeed, 24 July 2018: This AI Machine Will Make Really Crappy Judgments About You, Here's Why It's Important

University of Melbourne Newsroom, 24 July 2018: Biometric Mirror Highlights Flaws in Artificial Intelligence

Publications

Proceedings of the 20th International Conference on the Foundations of Digital Games. Melissa J Rogerson, Sasha Soraine and Niels Wouters: A Surfeit of Barbarians: Using Facial Recognition Technology to Generate a Dungeons and Dragons Character.

Pursuit, 2021. Niels Wouters and Jeannie Paterson: TikTok Captures your Face.

Pursuit, 2020. Niels Wouters and Ryan Kelly: The Danger of Surveillance Tech Post COVID-19.

Proceedings of the 2019 Conference on Designing Interactive Systems. Niels Wouters, Ryan Kelly, Eduardo Velloso, Katrin Wolf, Hasan Shahid Ferdous, Joshua Newn, Zaher Joukhadar and Frank Vetere: Biometric Mirror: Exploring Ethical Opinions towards Facial Analysis and Automated Decision-Making.

Pursuit, 2018. Niels Wouters and Frank Vetere: Holding a Black Mirror up to Artificial Intelligence.

The Conversation, 2018. Niels Wouters and Frank Vetere: AI Scans your Data to Assess your Character but Biometric Mirror Asks: What if it Gets it Wrong?